Here is How I Learn Anything Within 48 Hours

Wed, 06 Aug 2025


From Zero to ChatGPT

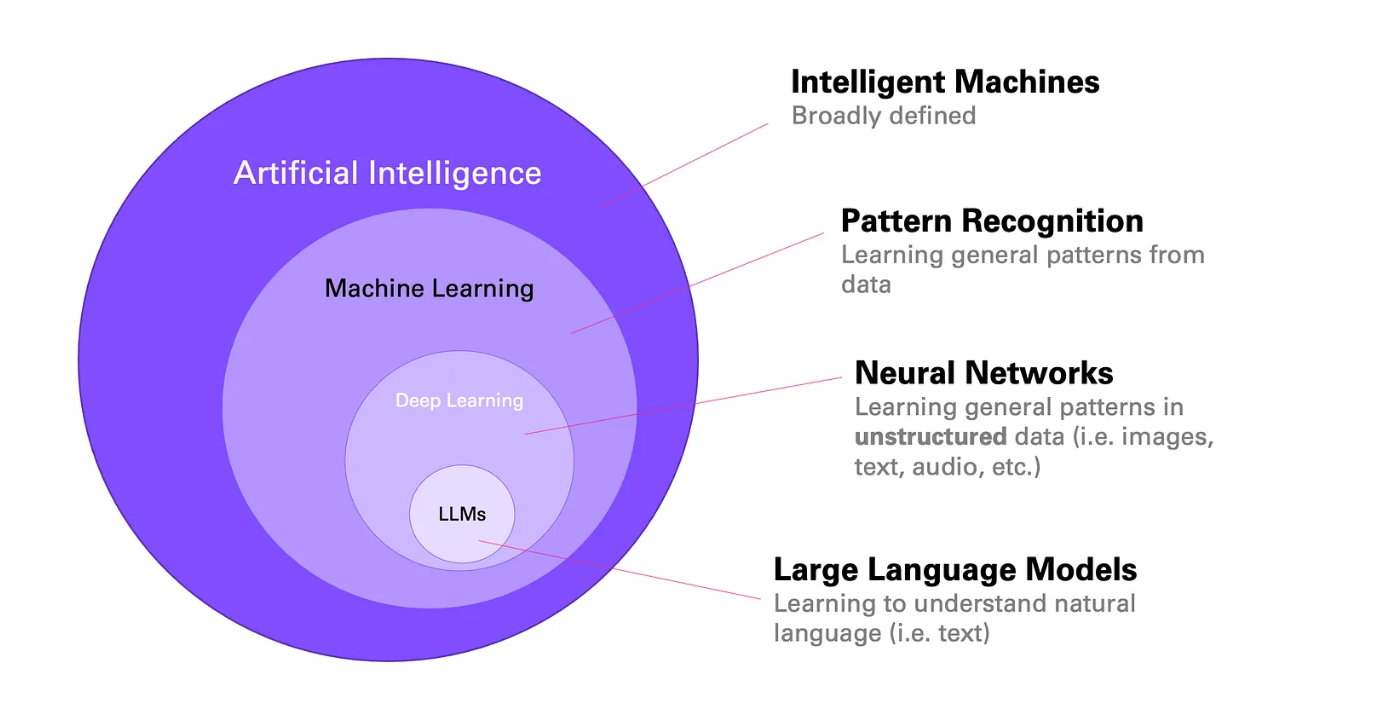

A large language model (LLM) is a computer program that can read and write text in a way that often sounds like a person wrote it. It learns by analyzing huge amounts of text from books, websites, and other sources.

Example:

If you ask, “What’s the capital of France?” the model can answer, “Paris.”

Data Collection: The model is fed billions of words from the internet, books, and articles.

Example: It reads sentences like “The cat sat on the mat.”

Tokenization: The text is broken down into small pieces called tokens.

Example: Depending on the tokenizer, “unhappiness” might become “un,” “happi,” and “ness.”
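To make the idea concrete, here is a toy tokenizer that splits a word by greedily matching the longest piece it can find in a small vocabulary. This is a simplified stand-in: real LLM tokenizers (for example, byte-pair encoding) learn a vocabulary of tens of thousands of pieces from data. The vocabulary `VOCAB` and the function `tokenize` are hypothetical names chosen for illustration.

```python
# Hypothetical toy vocabulary. Real tokenizers learn their pieces from data.
VOCAB = {"un", "happi", "ness", "play", "ing"}


def tokenize(word: str, vocab: set[str]) -> list[str]:
    """Split a word into the longest vocabulary pieces, left to right."""
    longest = max(len(piece) for piece in vocab)
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest possible piece first, then shorter ones.
        for length in range(min(longest, len(word) - i), 0, -1):
            piece = word[i:i + length]
            if piece in vocab:
                tokens.append(piece)
                i += length
                break
        else:
            # No vocabulary piece matches here: fall back to one character.
            tokens.append(word[i])
            i += 1
    return tokens


print(tokenize("unhappiness", VOCAB))  # ['un', 'happi', 'ness']
print(tokenize("playing", VOCAB))      # ['play', 'ing']
```

The single-character fallback means no input is ever rejected; real tokenizers get the same guarantee by including individual characters or bytes in their vocabulary.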

Learning Patterns: The model looks for patterns in how words and sentences are used.

Example: It learns that “good morning” is a common greeting.
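An LLM does not keep an explicit table of word counts; it absorbs patterns into its internal weights during training. Still, counting which word tends to follow which in a small sample of text is a simple way to see what a “pattern in how words are used” means. The three-sentence `corpus` below is made up for illustration.

```python
from collections import Counter

# A tiny, made-up corpus standing in for billions of words of training text.
corpus = [
    "Good morning everyone",
    "Good morning to you",
    "Good night",
]


def count_word_pairs(sentences: list[str]) -> Counter:
    """Count how often each word is immediately followed by another word."""
    pairs = Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        # zip pairs each word with the word right after it.
        pairs.update(zip(words, words[1:]))
    return pairs


pairs = count_word_pairs(corpus)
print(pairs[("good", "morning")])  # 2
print(pairs.most_common(1))        # [(('good', 'morning'), 2)]
```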

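Transformer Model: Most LLMs use a neural network design called a transformer, which looks at all the words in a passage together so each word can be read in context.

Example: The model can tell that “bank” means a place for money in “I went to the bank to deposit my paycheck,” but means a river’s edge in “I sat on the bank of the river.”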

Attention Mechanism: For each word, the model weighs how relevant every other word in the sentence is.

Example: In “The dog that chased the cat was fast,” attention helps the model link “was fast” to “dog” rather than “cat.”
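Transformers commonly compute attention as *scaled dot-product attention*: compare a query vector with each word’s key vector, turn the scores into weights with softmax, and take a weighted average of the value vectors. The minimal sketch below handles a single query. The 2-D vectors are hypothetical and hand-picked so that the query for “was fast” lines up with “dog”; a real model learns much larger vectors through trained projections.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # Similarity between the query and each key, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output is the attention-weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output


# Hypothetical 2-D vectors for three words in the sentence.
words = ["dog", "chased", "cat"]
keys = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
values = keys  # reuse the keys as values to keep the example small
query_was_fast = [2.0, 0.0]  # hypothetical query for "was fast"

weights, output = attend(query_was_fast, keys, values)
for word, w in zip(words, weights):
    print(f"{word}: {w:.2f}")  # "dog" receives the largest weight
```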

Layers: The model has many layers of artificial neurons.

Example: Each layer refines the output of the layer before it, a bit like stacking filters to get a clearer picture.
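The stacking idea can be sketched with two tiny layers, where each layer takes weighted sums of its inputs, adds a bias, and zeroes out negatives (ReLU). This only illustrates how one layer’s output feeds the next; a real transformer layer also contains attention and other parts, and its weights are learned rather than hand-written. The weights in `LAYERS` are hypothetical.

```python
def relu(xs: list[float]) -> list[float]:
    """Keep positive values, replace negatives with 0."""
    return [max(0.0, x) for x in xs]


def dense_layer(x, weights, bias):
    """One layer: weighted sums of the inputs plus a bias, then ReLU."""
    return relu([sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)])


# Hypothetical (weights, bias) for two stacked layers.
LAYERS = [
    ([[1.0, 0.0], [0.0, -1.0]], [0.0, 0.0]),
    ([[1.0, 1.0], [2.0, 0.0]], [0.5, 0.0]),
]


def run_layers(x, layers):
    """Feed the output of each layer into the next one."""
    for weights, bias in layers:
        x = dense_layer(x, weights, bias)
    return x


print(run_layers([1.0, 2.0], LAYERS))  # [1.5, 2.0]
```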

Pre-Training: The model tries to guess the next word in a sentence.

Example: Given “The sky is ___,” it learns to fill in “blue.”
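During pre-training, the model outputs a probability for each possible next word, and training typically minimizes the *cross-entropy loss*: the negative log of the probability it gave to the word that actually came next. The probabilities in `predicted` below are made up; a real model scores every token in its vocabulary at each step.

```python
import math

# Hypothetical probabilities a model might assign to the blank in "The sky is ___".
predicted = {"blue": 0.7, "cloudy": 0.2, "green": 0.1}


def next_word_loss(probs: dict[str, float], actual: str) -> float:
    """Cross-entropy loss: small when the actual next word got high probability."""
    return -math.log(probs[actual])


print(round(next_word_loss(predicted, "blue"), 3))   # 0.357
print(round(next_word_loss(predicted, "green"), 3))  # 2.303
```

Training nudges the model’s weights so that, across billions of examples, this loss goes down, which is the same as giving the real next word a higher probability.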

Fine-Tuning: The model is trained on more specific tasks.

Example: It learns to answer questions or summarize news articles.

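Human Feedback: People review and rate the model’s answers, and the model is then trained to prefer the kinds of answers people rated highly.

Example: If the model says “Paris is in Germany,” reviewers rate that answer poorly, which teaches the model to avoid it.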
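A widely used way to turn human ratings into a training signal, part of reinforcement learning from human feedback (RLHF), is to train a separate reward model on pairs of answers where people picked the better one. Its pairwise loss is small when the preferred answer scores higher than the rejected one. The sketch below shows only that loss, with hypothetical reward scores; the full pipeline then uses the reward model to further train the LLM.

```python
import math


def preference_loss(score_preferred: float, score_rejected: float) -> float:
    """Pairwise loss: -log(sigmoid(preferred - rejected))."""
    margin = score_preferred - score_rejected
    return -math.log(1.0 / (1.0 + math.exp(-margin)))


# Hypothetical reward-model scores for two answers to "Where is Paris?"
good_answer = 2.0  # "Paris is in France."
bad_answer = 0.0   # "Paris is in Germany."

print(round(preference_loss(good_answer, bad_answer), 3))  # 0.127
print(round(preference_loss(bad_answer, good_answer), 3))  # 2.127
```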

Input: You type a question or prompt.

Example: “Write a poem about rain.”

Processing: The model turns your words into tokens and runs them through its layers.

Prediction: It predicts the next word, then the next, until it forms a complete answer.

Example: “Rain falls softly on the ground, making a gentle, soothing sound.”
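The “predict, append, repeat” loop can be sketched with a hypothetical table of next-word probabilities. This version uses *greedy decoding* (always take the most likely word) and stops at an end marker. A real LLM computes fresh probabilities with its network at every step and conditions on the whole text so far, not just the last word. Chatbots also often sample from the probabilities instead of always taking the top word, which is one reason the same prompt can produce different answers.

```python
# Hypothetical next-word probabilities, keyed by the previous word only.
NEXT_WORD = {
    "rain": {"falls": 0.8, "stops": 0.2},
    "falls": {"softly": 0.6, "hard": 0.4},
    "softly": {"<end>": 0.7, "down": 0.3},
}


def generate(start: str, table: dict, max_words: int = 10) -> str:
    """Greedy decoding: repeatedly append the most likely next word."""
    words = [start]
    for _ in range(max_words):
        options = table.get(words[-1])
        if not options:
            break  # no known continuation
        next_word = max(options, key=options.get)
        if next_word == "<end>":
            break  # the model predicts the answer is finished
        words.append(next_word)
    return " ".join(words)


print(generate("rain", NEXT_WORD))  # rain falls softly
```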

Output: You get a response that usually sounds natural and makes sense, though it can still contain mistakes.

ChatGPT: This is a chatbot from OpenAI, built on top of its GPT family of large language models.

Example: You ask, “Can you help me with my homework?” and it gives you tips or answers.

Improvements: Newer versions can handle more complex tasks and longer conversations.

Example: They can write stories, solve math problems, or explain science topics.

LLMs learn by reading lots of text.

Example: They read millions of sentences like “Birds can fly.”

They use transformers to understand context.

Example: They can tell from the rest of the sentence whether “bark” means a dog’s sound or a tree’s covering.

Training happens in two main steps: pre-training and fine-tuning, which can include learning from human feedback.

Example: First, they learn general language; then, they learn to answer questions.

ChatGPT is a popular example that uses these ideas to chat with people.

| Step | What Happens | Example |
|---|---|---|
| Data Collection | Gather billions of words from many sources | Reads “The sun rises in the east.” |
| Tokenization | Break text into small pieces (tokens) | “playing” → “play” + “ing” |
| Pre-Training | Model learns to predict the next word | “She drank a cup of ___” → “tea” |
| Fine-Tuning | Model is trained for specific tasks | Learns to answer “What is 2+2?” → “4” |
| Human Feedback | People review and rate answers | Rates “The earth is flat” as wrong and “The earth is round” as right |
| ChatGPT | Model is used in a chatbot for real conversations | Answers “Tell me a joke” with a joke |

Large language models make tools like ChatGPT possible. They help people get answers, write text, and much more.

